Listen now

In my previous blog posts (Operationalizing Generative AI part 1 | part 2 ) I discussed the use of Large Language Models (LLM) in NGP Capital. This time my focus is on LLM agents, which provide useful complementary capabilities to LLMs but will also pave the way to the next generation of self-learning software systems. For startup founders, agents provide a way to integrate the general knowledge and reasoning capabilities of LLMs with their products, create applications that surpass capabilities of what has been possible earlier, and even tackle use cases that would have been impossible earlier.

If you’ve ever tried to use a Large Language Model for a complex mathematical problem, you’ve likely noticed that they tend to fail miserably. This is often interpreted as proof of the innate stupidness of LLMs, as they only don’t find the correct result, but worse, don’t seem to acknowledge that they are in the wrong. However, to understand why this is happening, we need to split the problem into two phases:

- Reasoning: the process of understanding how in principle to solve the problem

- Arithmetics: the actual numerical operations related to solving the problem

When we ask a model to be verbal in its thinking, we can see that a good model generally excels in reasoning (1) - it tends to come up with the correct thought process. However, the failure is caused by a lack of performance in arithmetics (2). This initially feels surprising, as simple arithmetics seem like a trivial problem. Keeping in mind that LLMs are ultimately text prediction algorithms, we can understand the root cause of the problem: an LLM as such is not equipped to do calculations. Luckily for us as users of LLMs, this problem is starting to be solved.

The solution is to equip LLMs with tools - in this specific case, we would like to give the LLM access to a calculator. Interestingly, the performance of LLMs with such problems is quite similar to humans. Without a calculator, I might be able to come up with a reasonable sounding but ultimately incorrect answer, but equipped with a calculator and with some background with mathematics, solving such problems will again become trivial, as they should be. In parallel, we should keep in mind that being able to reason (1) how the problem is solved is much more impressive feat than the arithmetic part (2), which was in principle already solved by Charles Babbage and Ada Lovelace in the 19th century.

Equipping LLMs with tooling brings us to the world of LLM agents. In the next section, we’ll drill down to the definition of an LLM agent, as well as their common characteristics and use cases. To illustrate where the market is heading, The Information reported on 7th February that OpenAI is currently investing heavily to LLM agent development. Luckily for startups working on software based on LLM agents, the potential scope is very wide. Most implementations of LLM agents will need to work within a enterprise perimeter defined by technical, organizational, and processual information security measures. This means we don’t expect the OpenAI tooling to replace internal or 3rd party agent development efforts.

What is an LLM agent?

An LLM agent is an autonomous goal-driven system that uses an LLM as its central reasoning engine. This allows the LLM to focus on tasks that it’s best suited to, and use other resources when other tools are better suited. These tools include the calculator discussed above, but other samples include for example a Python REPL (programming console), Google Search and AWS Lambda function runner. It’s important to keep in mind that these are just a few examples of out-of-the-box alternatives available e.g. in LangChain, whereas in practice developers can develop their tools when one doesn’t exist yet, and the scope of those tools is practically unlimited.

Looking into possible use cases for LLM agents, we can find LLMs built for at least two types of needs: there are agents built for conversational use, as well as task-oriented agents that work on inputs and outputs, and can for example autonomously interact with external APIs both as resources as well as to execute outputs of the process.

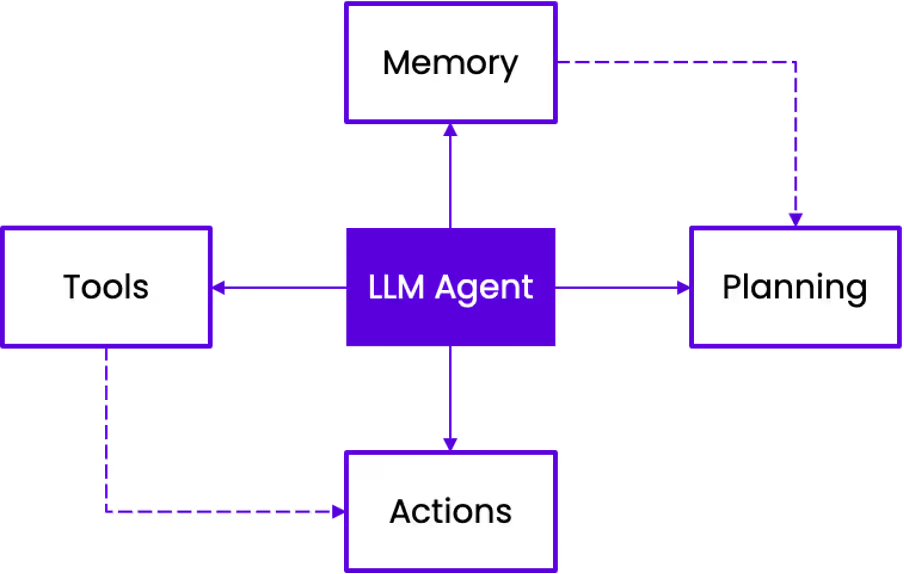

A blog post by Lilian Weng, Head of Safety Systems at OpenAI at the time of writing, describes the key components of an LLM agent. For this write-up, we’ll simplify the overview a bit and focus only on the roles of the components within an LLM agent system. For a deeper dive to possible approaches for each, reading the blog post is highly recommended.

The core of an LLM agent is the Planning module. It will need to be aware of all resources in its use, and be able to understand when those resources could be used. The current baseline for LLM planning is called Chain-of-Thought. Instead of just asking the model what to do, it’s prompted to think step-by-step, which helps the model better decompose complex tasks to smaller, simpler and more atomic steps. This can be further improved with self-reflection, where the model has access to its past actions and can correct its previous mistakes. This is depicted by the dotted line from memory to planning in Chart 1.

We already covered some examples of Tools above, so we can focus here on the principles. In general, tools should be modular and atomic, making them fit well with steps of the Chain-of-Thought process discussed above. Equipping an LLM agent with external tools should also always significantly extend its capabilities. For a software developer, it can be most practical to think of tools as specialized external APIs. Whether or not they specifically conform to a REST API structure or similar, their role towards the LLM agent is similar to the role of an external API to an application. Tools can be either neural (utilizing a neural network model) or symbolic (e.g. calculator as discussed above).

Action is a concept very close to tools. When an LLM agent makes use of a tool, it decides to do an action. The action can 1) be internal to the train of thought of the agent, or 2) external as an output of the process. A conversational agent is typically limited to the former, whereas a task-oriented agent also engages in the latter. In some simple interactions, the agent might also decide not to undertake an action, if it decides that an action would not bring additional value to the reasoning.

Simplest LLM agents can function without Memory, but nearly every practical implementation will require some degree of memory. Used in the context of agents, memory means much more than Retrieval Augmented Generation (RAG), which is a favored technique of augmenting LLMs with enterprise knowledge. Memory in the context of agents comes in two varieties: short-term and long-term. Short-term refers to the train of thought for answering a single question that typically fits within the context window of an LLM, whereas long-term memory is a more persistent logbook of events between the agent and the user, persisted to an external storage.

Sample use case – Semantic search agent

I previously wrote about our semantic search LLM use case at NGP Capital. Semantic search is based on meaning, distinguished from lexical search which focuses on the exact words used. I won’t dive deeper into the concepts of semantic search (such as embeddings) in this write, but the use case below should be readable without a granular understanding of the concepts. For more context and a deeper dive into semantic search, please refer to the previous blog post.

Something that has become clear with semantic search becoming commonplace and novelty value decreasing, is that the problem of applying simple semantic search as a singular tool is often not enough. End users tend to understand search intent more granularly than only as search for the closest semantic match. For example, consider the following search phrase “I want to find companies working with quantum software in Europe, that have raised at least $5M of equity funding”. It can be split to components as follows:

- “companies working with quantum software” is the actual semantic search, where the user expects semantic understanding rather than exact keyword matching

- “in Europe” is a filter, which the user intends to work in a binary way. The user is interested only in companies within Europe, not anywhere else.

- “raised at least $5M of equity funding” is another filter, which needs to be combined with the previous one with “AND” logical operator

Now, if the semantic search is done with the full search phrase, we would only look for the closest embedding matches based on for example cosine similarity of the embedding vectors. The matching would likely favor European companies that have raised more than $5M of equity funding, but there would be no guarantees for such results. Having an understanding of available filters, being able to extract them from a search phrase, and being able to construct the correct search API call with the search phrase and filters correctly applied is something that was nearly impossible in the pre-GPT-world, but now trivial for an LLM agent.

The modern solution is to build an LLM agent with a semantic search tool and a knowledge of available filters. The agent will use its reasoning capabilities to interpret the actual search intent of the user, and decompose it to filters and search phrase. These are sent forward to a vector database for a combined search, with the filters used in a binary way, resulting in a correct evaluation of the user’s search intent.

In practice, this implementation combines LLM agents with Retrieval Augmented Generation (RAG), bringing two buzzwords of AI use together to a coherent solution. For a founder, it’s an example of how to combine the general knowledge and reasoning abilities of an LLM with more specific software tools. The full extent of similar solutions is only limited by imagination, with the part requiring reasoning and judgement delegated to an LLM agent.

Multi-agent collaboration

Now that we understand how an LLM agent works, the question is what comes next? It turns out, multiple agents collaborating, or even a swarm of agents, often perform better than single agents, and are able to solve problems that single agents cannot. This is, again quite surprisingly, analogous to teamwork. Different agents can take different roles, and they can interact with each other in a collaborative or competitive manner.

Thinking of sample use cases for multi-agent interaction is easy. One practical example is clinical decision support for healthcare. By employing multiple LLM agents as specialists of various medical fields (e.g. cardiology, radiology, oncology), and having one LLM agent to coordinate the diagnostic work, it could be possible to achieve better results than a vanilla LLM could. The thinking is based on priming the specialist agents to approach the case from a certain angle. This gives them more focus, capability and understanding within their own specialization, without the need to consider every potential angle to the issue at hand. The coordinating agent can then evaluate the outputs in context of each other, and use its more generic approach to decide on the most likely diagnosis and most effective course of action.

What comes next?

We at NGP Capital will naturally continue our development of an AI-driven investment platform, and utilize LLM agents in the process whenever it makes sense. LLM Agents are also instrumental in new ways of building software, making LLM agents an interesting investment area by itself, as well as built within products in nearly all market verticals.

A phenomena discussed to some extent is whether LLM agents could augment or replace Robotic Process Automation (RPA). As LLMs are capable of reasoning and can act independently, it seems their ability can surpass RPA across a broader and more generic range of uses. Instead of just automating deterministic tasks, we could potentially expect LLMs to exercise some degree of judgement with relatively generic instructions.

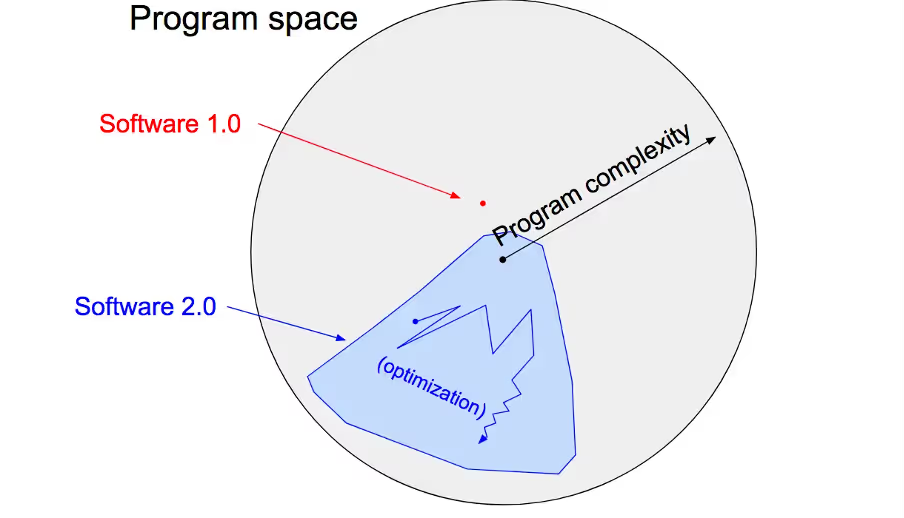

A more encompassing vision is to see LLM agents as an enabler to Software 2.0 as defined by the AI heavyweight Andrej Karpathy here. The Software 2.0 concept is based on the thinking that traditional software (here referred to as Software 1.0) is explicit instructions for a computer written by a programmer. It is a point of instruction for a very specific goal, unable to contribute to or solve even nearly (but not completely) identical problems.

With Software 2.0, artificial intelligence and especially neural networks, are utilized to build intelligent, self-learning systems. Instead of writing the explicit program code, the source code could be a dataset that defines the desirable behavior of the software, and a neural network architecture that serves a a skeleton for the software. The role of LLM agents as an enabler of Software 2.0 is discussed by wiz.ai in this article. In practice, LLM agents can be the command center of Software 2.0, orchestrating the work of different LLMs and their dynamic calls to different tools, embedding the software with a deep understanding of enterprise knowledge.

.svg)

.svg)

.avif)