Listen now

Generative AI, referring to algorithms used to create new content, including text, audio, and video, is the buzzword of 2023. This stems from the observation that output from Generative AI models can often be indistinguishable from human-generated content. As access to Generative AI currently goes mostly through proprietary third-party software, we’ll focus on how it can be operationalized and integrated into other software.

Generative AI is powered by Large Language Models (LLMs), pre-trained models that can often solve a large scope of problems, which provide the starting point to Generative AI for most practitioners. A major difference with the artisanal way of building AI is that LLMs often perform well in so-called “zero-shot” scenarios, referring to situations the model has not encountered in training. Whereas the previous school of AI was used to solve hyper-specific problems, a Generative AI model can power a large downstream of tasks.

Following the launch of ChatGPT in late 2022, Generative AI moved mainstream, and the excitement around it took a new pace. However, what often remains unsaid is that ChatGPT is just a chatbot-like API to an underlying LLM called GPT-3, first released during the summer of 2020. Nevertheless, public access to ChatGPT has led to a lot of hype, which often assumes the model has higher capabilities and sentience than it does. On the other hand, seeing ChatGPT as an exhaustive example of the capabilities of Generative AI has also created a lot of misguided critiques: we have to remember that it is just a showcase of the GPT-3 model. It doesn’t have access to external, up-to-date, often proprietary data, but also works without a more specific understanding of the problem and its context.

In this two-part article series, we’ll take a deep dive into the operationalization of Generative AI and LLMs: how does it differ from the approach of ChatGPT, and what kind of tools are used to deploy models and draw an inference in real-time. In this first part, we’ll set the stage by looking into the history of LLMs and discussing the operational use of LLMs on a conceptual level. In the second part, we’ll look at how the deployment architecture might look in practice and explore a semantic search use case we’ve built at NGP Capital.

Brief History of Large Language Models

Taking a look into history is not important only for academic interest, but also for building a deeper understanding of the field and the possibilities ahead of us.

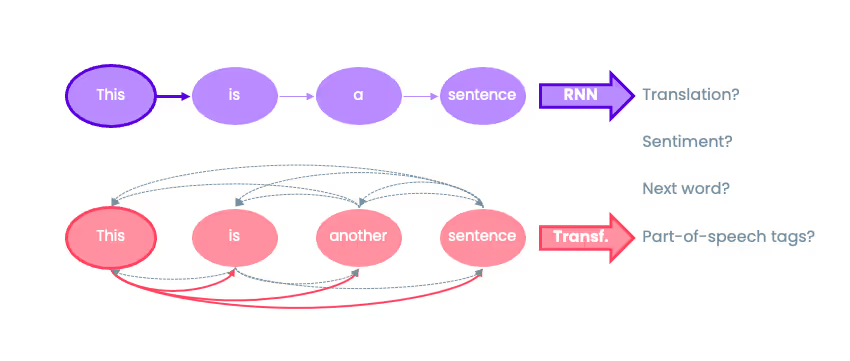

When we talk about ChatGPT and GPT-3, we are referring to a proprietary set of models and interfaces owned by OpenAI, a privately owned research laboratory that now mostly operates for profit. OpenAI has significantly contributed to research in the field, and simultaneously led the popularization of the movement. However, focusing only on OpenAI makes us bypass a longer string of research, stemming from neural networks in general, and more specifically a subset of deep learning models called transformers. Instead of an earlier approach of representing texts one word at a time, transformers are based on an attention mechanism where the importance of all the words are compared to each other.

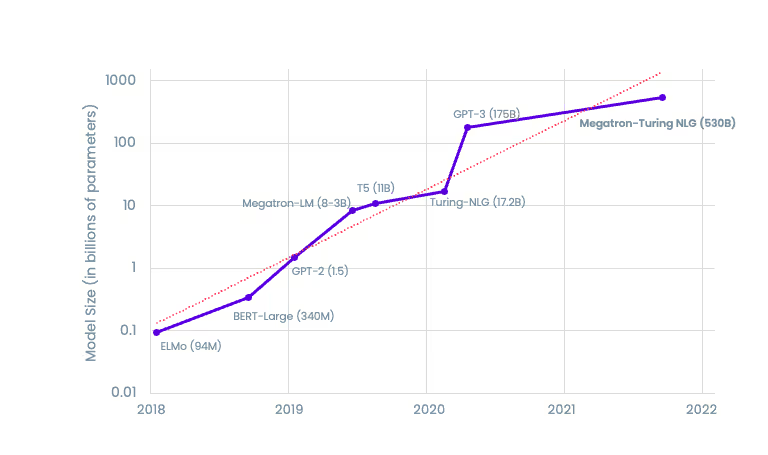

Transformer models were first introduced by a team at Google Brain in 2017. Even more importantly, a transformer model called BERT was open sourced by Google in November 2018. At the same time, other foundational research and models were open sourced, starting an arms race within the industry - still 2-3 years before GPT-3 and its first accomplishments became common knowledge. An interesting characteristic of LLMs seems to be that quantity matters: increasing model complexity and the amount of training data tends to lead to better outcomes. This has lead to comparisons on amount of parameters as a proxy of performance: from 110M parameters of BERT, to 175B parameters of GPT-3 and a whopping estimated 100T (yes, trillion) parameters of upcoming GPT-4. This makes GPT-4 roughly a million times larger than BERT.

Increases in model complexity highlight another characteristic of LLMs: training a model tends to consume a significant amount of compute, which results in high costs, use of electricity and carbon emissions. For example, it is estimated that training GPT-3 consumed 936 MWh of electricity, which equals roughly to the production of a nuclear reactor for one hour. Training LLMs for singular problems doesn’t make sense, but they are planned for recurring use, which also explains why LLMs are often called foundation models. This term highlights the usage of LLMs as a base layer of models, with another set of lighter, context-specific models built on top of them to answer to problems on a more narrow domain. We expect this type of LLM use to be a continuous source of innovation over the next years.

How can LLMs be used in practice?

The interface to LLMs the general public is most familiar with is ChatGPT, a chatbot. In addition to this, some of us might have used tools building on top of LLMs, such as software developers using OpenAI Codex or GitHub Copilot and content creators utilizing Jasper or Midjourney in their work. However, when using LLMs as part of products and software, the interface is most often exposed to world as a well documented REST or GraphQL API. A program sends an input, most often a piece of text to an external service, and the API returns its expected output, whether text, vectors, image or something else.

As an example, in addition to ChatGPT, OpenAI is offering its product as an API. The API supports copywriting, summarization, parsing of unstructured text, text classification and translation, as well as text-to-SQL, text-to-API-calls and code continuation features, which can be embedded to any software that conforms with their terms of use. On the other hand, something that we’ll revisit in part 2, if commercial APIs do not satisfy requirements or are a bad fit for some other reason, open source LLMs can be deployed to private endpoints, bringing similar functionality to a more contained setup.

In the case of OpenAI and other commercial providers, the API is most often offered as a service, typically with a transaction pricing based on count of requests. Pricing model is typically designed for high quantity, with single requests costing only fractions of cents. This has led some observers to assume it’s easier to monetize products built on top of LLMs rather than the LLMs themselves. However, there are now signs that at least OpenAI is taking large strides towards commercializing GPT-3 and ChatGPT.

Some of the most prevalent critiques of ChatGPT, often misguidedly generalized to LLMs in general, are related to the fact that the model version supporting ChatGPT was trained during the summer of 2021, and is not aware of anything that has happened after that moment. Another common trajectory of criticism is related to ChatGPT's performance in some specific types of problems, e.g. complex calculus with numbers starting from the high 3-digits. However, both of these arguments are based on a limited understanding of how LLMs will actually be used in practice.

Regardless of when LLMs are trained and the training data last updated, their capabilities in interpretation can be used for other problems. In these cases, a LLM is used in conjunction with external data, with the external data bringing in timeliness to the picture. This is actually quite similar to how we as humans consume new information: we can use our existing knowledge to interpret the new information and put it into a relevant context. The same applies to calculus: a LLM should never be used for it, as there are much better fitting tools in the computing toolbox. You wouldn’t hit a nail with a saw either: even though you could probably get the job done with 1000x the expected effort, it was a stupid thing to do.

In part 2, we’ll explore how a deployment architecture for LLMs might look in practice. We’ll also dive deeper into a use case where a LLM is used to power semantic search. At NGP Capital, we’re excited about the strides Generative AI is taking, and how it powers and is embedded to groundbreakingly intelligent products. We also welcome challenging views and comments. For questions or feedback, you can reach me at atte@ngpcap.com.

.svg)

.avif)

.svg)

.avif)

.avif)