The adoption of large language models (LLMs) and GenAI apps is a watershed moment for the cybersecurity industry. The world has already witnessed how autonomous agents and generative tools can create a step function increase in productivity across other enterprise functions ranging from software development to customer service.

At NGP Capital, we have been investing in both cybersecurity and enterprise AI for the last 10 years and believe the convergence of these technologies will create outsized value given the criticality of speed and accuracy when responding to cyber threats. Emerging startups that are reimagining the current cyber workflows by building AI-native capabilities from the ground up will grow into the next generation of category leaders. That said, they will need to fend off competition and build proprietary datasets and workflows to stay ahead of incumbents who have already begun to roll out generative capabilities.

The current enterprise cyber model is broken

Today, the cyber workflows and tools for detection, investigation, and response are highly manual and often require offshore labor through managed security service providers like Accenture or IBM to meet the sheer volume of threats. Data is siloed across a sprawl of security tools, SaaS and on-prem apps, and software infrastructure, which leads to blind spots, low productivity, and rising costs.

Security operations have become a constant cat-and-mouse game. The average cost to run an internal security operations center (SOC) has ballooned to more than $2.8M per year (Ponemon 2020). Security teams are stretched thin, scrambling to write detection rules and respond to alerts quickly enough to stay ahead of new attack vectors. According to Vectra’s 2023 report, SOC teams receive roughly 4,500 alerts per day, which the report translates into over $3B of annual labor expense. Even with this hefty spending, the same report found that SOC teams are unable to deal with over two-thirds of these alerts, leaving a large, unsolved gap in enterprise security programs.
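To put those figures in perspective, here is a back-of-the-envelope calculation using only the two numbers quoted from the Vectra report. It treats "over two-thirds" as exactly 2/3, which is an assumption made for simplicity, so the results should be read as a rough lower bound for a single SOC team.

```python
from fractions import Fraction

ALERTS_PER_DAY = 4500               # figure quoted from Vectra's 2023 report
UNADDRESSED_SHARE = Fraction(2, 3)  # assumption: "over two-thirds" taken as exactly 2/3

def unaddressed_alerts(alerts_per_day: int, share: Fraction, days: int = 1) -> int:
    """Return the number of alerts left untriaged over `days` days (rounded down)."""
    return int(alerts_per_day * share * days)

daily_backlog = unaddressed_alerts(ALERTS_PER_DAY, UNADDRESSED_SHARE)
annual_backlog = unaddressed_alerts(ALERTS_PER_DAY, UNADDRESSED_SHARE, days=365)

print(f"Unaddressed per day:  {daily_backlog:,}")
print(f"Unaddressed per year: {annual_backlog:,}")
```

Under these assumptions, a team falls behind by about 3,000 alerts every day, or more than a million per year.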

Further, the gap between the number of skilled cyber professionals needed and the number available rose 12.6% globally to hit a record of nearly 4 million in 2023. This shortfall leaves security teams overworked, under-resourced, and under-trained, which limits their ability to defend against threat actors. The World Economic Forum predicts the costs of cybercrime will hit $20T+ globally by 2027, up nearly 4x from 2021.

The potential of LLMs in cyber!

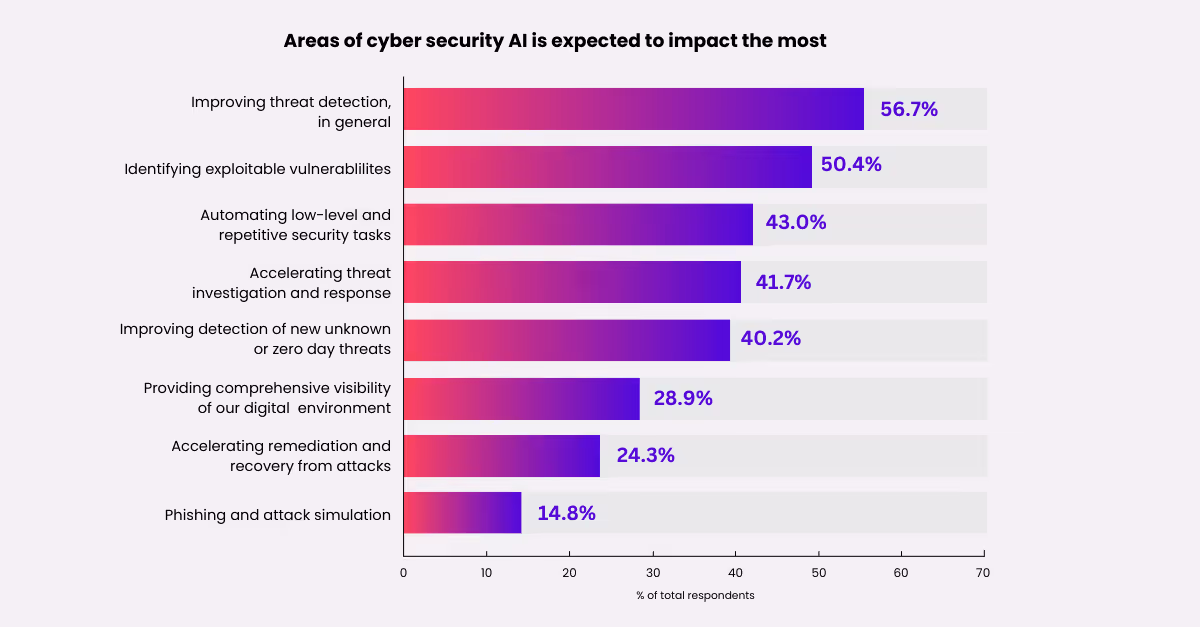

As companies continue to digitize and build complexity in their infrastructure and IT stack, the attack surface will grow and further intensify the need for new modalities of automation. Security operations is just one of several segments that are ripe for disruption with generative AI. We’ve identified five key areas where generative AI will have the greatest impact in cyber. Let’s dive deeper into each of these categories.

Security Operations & Threat Detection:

LLMs excel in gathering, correlating, and analyzing data from large datasets to identify gaps, autonomously generate detection rules, and prioritize threats based on business risk.

1. Security Knowledge Layer: These platforms ingest logs from existing security tools such as SIEM systems, data security platforms, identity providers, and threat intel feeds to correlate and elevate the alerts that have the highest level of business risk. They have also been architected to leverage and support AI features with vector stores and fine-tuned security models available out-of-the-box, so customers can seamlessly integrate their own AI assets.

2. Threat Exposure Management: By analyzing historical incident data and threat intel feeds, LLMs can predict future attacks and simulate hypothetical scenarios, empowering organizations to proactively strengthen their cyber defenses and minimize the impact of breaches.
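The correlate-and-prioritize idea described under the Security Knowledge Layer can be sketched in a few lines. This is a minimal illustration, not any vendor's method: the `Alert` fields, the asset-criticality table, and the scoring formula (highest severity times asset criticality times the number of distinct tools reporting) are all hypothetical assumptions chosen for readability.

```python
from collections import defaultdict
from dataclasses import dataclass

@dataclass(frozen=True)
class Alert:
    source: str    # tool that raised the alert, e.g. "siem" or "idp" (hypothetical labels)
    asset: str     # affected asset identifier
    severity: int  # 1 (low) to 5 (critical), as reported by the tool

# Hypothetical business-criticality weights per asset; unknown assets default to 1.
ASSET_CRITICALITY = {"payments-db": 5, "hr-portal": 3, "dev-laptop-17": 1}

def prioritize(alerts: list[Alert]) -> list[tuple[str, int]]:
    """Group alerts by asset and rank assets by an illustrative risk score."""
    by_asset: dict[str, list[Alert]] = defaultdict(list)
    for alert in alerts:
        by_asset[alert.asset].append(alert)

    scores = []
    for asset, group in by_asset.items():
        max_severity = max(a.severity for a in group)
        distinct_sources = len({a.source for a in group})
        criticality = ASSET_CRITICALITY.get(asset, 1)
        # Corroboration from several independent tools raises the score.
        scores.append((asset, max_severity * criticality * distinct_sources))
    # Highest score first; ties broken alphabetically for stable output.
    return sorted(scores, key=lambda item: (-item[1], item[0]))

alerts = [
    Alert("siem", "dev-laptop-17", 5),
    Alert("siem", "payments-db", 3),
    Alert("idp", "payments-db", 4),
    Alert("threat-intel", "hr-portal", 2),
]
ranked = prioritize(alerts)
for asset, score in ranked:
    print(f"{asset}: {score}")
```

In this toy data, the critical-severity laptop alert ranks below the medium-severity database alerts, because the database matters more to the business and two independent tools corroborate the activity.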

Incident Response & Investigation:

Automating incident response with LLMs drastically reduces the time needed to identify the cause of a breach, build incident response (IR) playbooks, and respond to alerts. Emerging startups synthesize complex alerts from disparate tools into a concise summary and response plan that is given to the SOC analyst in plain English, lowering mean time to respond (MTTR) while increasing alert capacity.

Security Awareness & Training:

LLMs can analyze an employee's job role, risk level, and security knowledge to help deliver individualized training materials that are relevant and insightful, while capturing real-time feedback. Adapting training content to different employee personas and experiences enhances overall security awareness, enabling organizations to mitigate the growing risks of highly personalized and more advanced social engineering campaigns.

Cyber GRC & TPRM:

LLMs can automate compliance tasks such as risk assessments, compliance audits, and third-party risk questionnaires, freeing up resources for more critical operations. They can also assist in mapping controls across the increasing number of data privacy and security regulations.

Data Security:

LLMs can classify sensitive data types (IP, financial models, engineering designs) with human-level accuracy. Instead of relying on manual review or superficial markers such as title, document type, or file size, innovators have built large classification models trained on a huge corpus of public data/documents to provide more granular visibility and controls for sensitive information. They also allow users to write data loss prevention (DLP) rules in plain English.
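One way to picture a plain-English DLP rule is as a structured policy that a model produces and a deterministic engine enforces. The sketch below shows only the enforcement half, under stated assumptions: the `DlpRule` and `Transfer` types, the category labels, and the example rule are hypothetical, the document category is assumed to come from an upstream classifier, and the translation from English to `DlpRule` (the step an LLM would perform) is hand-written here rather than generated.

```python
from dataclasses import dataclass

@dataclass(frozen=True)
class DlpRule:
    description: str                      # the rule as a user might phrase it in plain English
    categories: frozenset[str]            # sensitive-data categories the rule covers
    blocked_destinations: frozenset[str]  # destinations the rule forbids

@dataclass(frozen=True)
class Transfer:
    document_category: str  # label assigned upstream by a classifier (assumed given)
    destination: str        # where the document is being sent

def is_blocked(rule: DlpRule, transfer: Transfer) -> bool:
    """Block a transfer when both its category and its destination match the rule."""
    return (transfer.document_category in rule.categories
            and transfer.destination in rule.blocked_destinations)

# Hand-written stand-in for what a model might emit from the English description.
rule = DlpRule(
    description="Do not let financial models or engineering designs leave via personal email.",
    categories=frozenset({"financial_model", "engineering_design"}),
    blocked_destinations=frozenset({"personal_email"}),
)

blocked = is_blocked(rule, Transfer("financial_model", "personal_email"))
allowed_destination = is_blocked(rule, Transfer("financial_model", "corporate_share"))
allowed_category = is_blocked(rule, Transfer("marketing_copy", "personal_email"))
print(blocked, allowed_destination, allowed_category)
```

Keeping the generated rule as plain data means a security team can review and audit it before it is enforced, rather than trusting the model's interpretation at the moment of each transfer.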

Beware of the risks!

Although LLMs will transform cyber protection, there are serious risks of adversaries harnessing this technology. Malicious actors are already using LLMs to automate reconnaissance, enhance malware scripting, find and exploit vulnerabilities, and create hyper-personalized social engineering campaigns. Microsoft and OpenAI released a report in February 2024 that documented some of the LLM-based tactics they’ve seen from a number of nation-state threat actors like Forest Blizzard (Russia) and Emerald Sleet (North Korea). Private threat actors have also weaponized LLMs by releasing fine-tuned models like WormGPT and FraudGPT that can generate malware and exploit unknown vulnerabilities.

Generative AI is poised to revolutionize cybersecurity, addressing key challenges in detection, response, and training while introducing new risks from malicious actors wielding similar tools. Will the cybersecurity industry leverage LLMs to stay ahead, or will it succumb to an AI-fueled wave of more sophisticated attacks?

In the next piece, we'll dive deeper into the five key areas, highlighting innovative approaches from emerging players and revealing the keys to winning. Stay tuned for an exclusive market map of the most disruptive AI-enabled cyber startups.

_________________

You've made it to the end! If you are building a company that is leveraging generative models to reimagine traditional cyber categories, I would love to connect via LinkedIn or email at eric@ngpcap.com. Always happy to chat if you have any feedback related to the post!
