Part 1 of this blog series explored the history of Generative AI and LLMs, and how they are integrated into other products. Part 2 takes a more technical approach, investigating LLM deployment in private environments and presenting a generic architecture. We'll also walk you through a use case we built at NGP Capital: a semantic search for textual data on early-stage startups.

Deploying an LLM model in a private environment

When data security, customization, or cost requires an internal deployment of an LLM, open source models become relevant. In general, LLMs can be deployed in the same way as any other machine learning model, either to an internal compute platform or to productized services provided by hyperscalers and many startups. The usual examples are AWS SageMaker, GCP Vertex AI, and Azure ML.

Out-of-the-box pre-trained models are provided in repositories such as Hugging Face and TensorFlow Hub. When a pre-trained model fits the problem, as one most often does, the model can be loaded directly from a repository and deployed to an endpoint. The endpoint is exposed to consumers as an API, allowing online inference from the model with typically sub-second response times. The services used to deploy models take care of practical issues such as latency, high availability, and scaling. Cost, however, needs watching: nearly infinitely scaling services running on large hardware can quickly run up a hefty bill if left unchecked.

The most important phase is testing: even when a model provides the output required, the performance of different models often varies a lot. It’s important to note that you don’t typically need a data scientist to test and deploy a model: after all, you’re just deploying and consuming it. This expands access to AI, as operationalization does not necessarily require the cost and effort associated with hiring or involving a data scientist. We expect this to be a massive enabler for use of AI in products: a basic implementation is now often just a normal software engineering and DevOps task.
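To make that testing step a little more concrete, here is a minimal sketch of one way to compare candidate embedding models side by side. It assumes a small hand-labeled set of (query, expected company) pairs and measures how often the expected company lands in the top k results. The function names, the hit-rate-at-k metric, and the idea of wrapping each candidate model in an `embed` function are illustrative assumptions, not a prescribed method.

```python
import math
from typing import Callable, Dict, List, Sequence, Tuple

Vector = Sequence[float]
EmbedFn = Callable[[str], Vector]  # hypothetical wrapper around one candidate model


def cosine(a: Vector, b: Vector) -> float:
    """Cosine similarity; returns 0.0 if either vector is all zeros."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


def hit_rate_at_k(
    embed: EmbedFn,
    corpus: Dict[str, str],
    labeled: List[Tuple[str, str]],
    k: int = 5,
) -> float:
    """Fraction of (query, expected_id) pairs whose expected id ranks in the top k."""
    doc_vecs = {doc_id: embed(text) for doc_id, text in corpus.items()}
    hits = 0
    for query, expected_id in labeled:
        q = embed(query)
        ranked = sorted(doc_vecs, key=lambda d: cosine(q, doc_vecs[d]), reverse=True)
        if expected_id in ranked[:k]:
            hits += 1
    return hits / len(labeled) if labeled else 0.0


def compare_models(
    models: Dict[str, EmbedFn],
    corpus: Dict[str, str],
    labeled: List[Tuple[str, str]],
    k: int = 5,
) -> Dict[str, float]:
    """Score every candidate model on the same labeled set."""
    return {name: hit_rate_at_k(fn, corpus, labeled, k) for name, fn in models.items()}
```

The same labeled set is reused for every model, so the scores are directly comparable; in practice, the set would need to be large enough to reflect the real queries your users run.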

Sometimes, either to fit the model to an exact problem or to increase its performance, you need to bring in your data scientist. Even then, you typically don’t develop your own LLM; instead, you either take an open source model and customize it, or train and deploy a downstream model to increase performance in a narrower context. Either route requires more specialized knowledge, but it’s important to be aware that open source models provide a great starting point, decreasing the effort required by at least an order of magnitude.

This also more generally represents our view of how the market will play out: an application layer forming on top of a model layer, with an infrastructure layer powering both. Products move from specific to generic as you go down the layers. Applications are typically created by separate companies and serve specific use cases, such as code generation or copywriting, whereas models, whether open or closed source, support a wide variety of use cases, products, and applications built on top of them. In the infrastructure layer, even more generic vendors, including the hyperscalers, often support Generative AI among other use cases.

We mentioned the issue of data security in part 1, but we’ll now take a closer look at it. When using a commercial API provided as a service, you often agree to let the provider use your data to improve model performance, which in practice means making your data part of their training data. This might not be the biggest information security risk out there, since it is nearly impossible to extract the exact input data from the LLM by prompting it. However, it needs to be considered in the context of organizational policies on data security and confidentiality in general, as well as legislation, especially for sensitive data such as PII (Personally Identifiable Information).

Sample Use Case: Semantic Search at NGP Capital

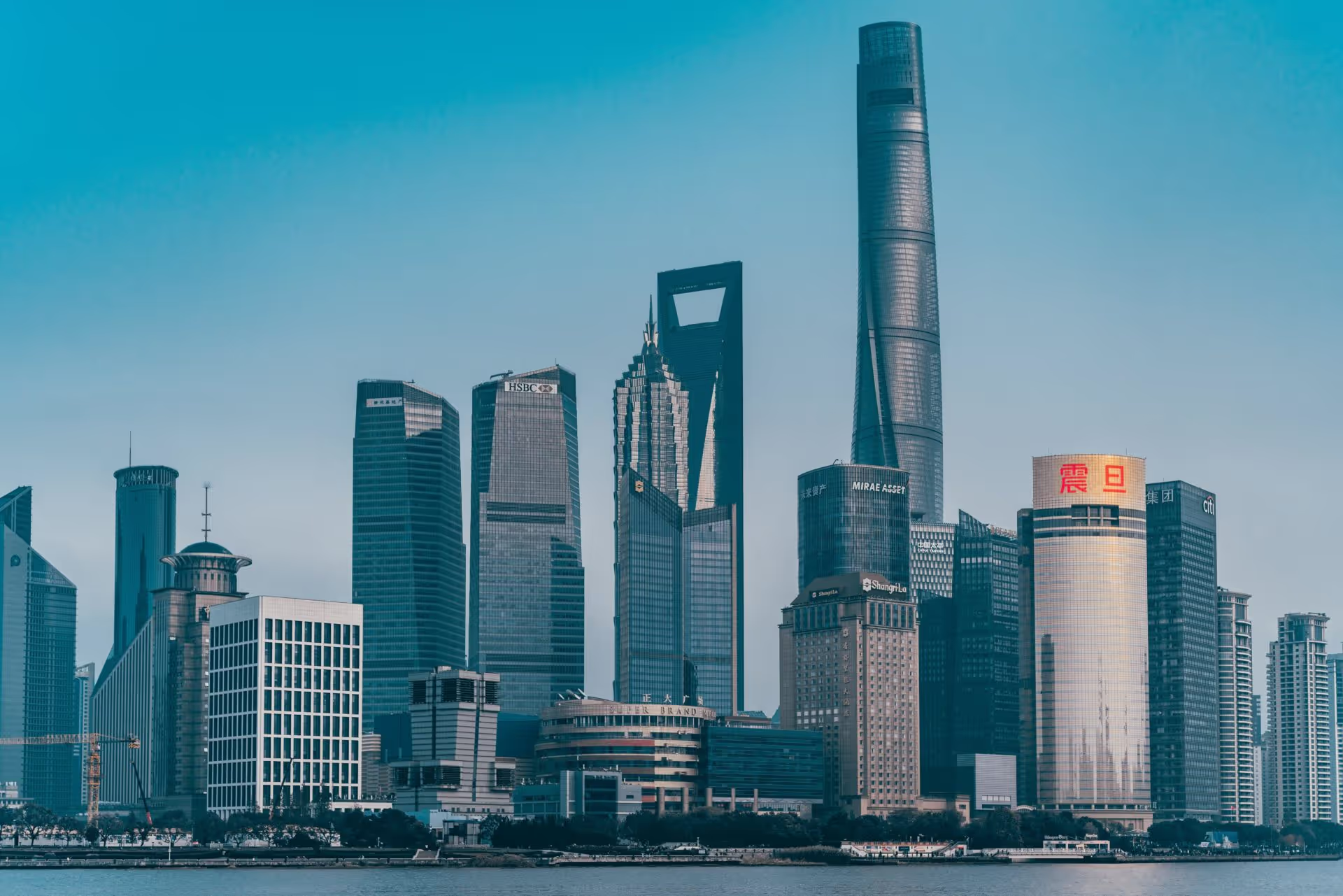

At NGP Capital, we invest in early-stage startups with exceptional ideas that can shape a better future. Our thesis is the great convergence: we believe the physical and virtual are finally coming together. As such, we want to find companies that are relevant to our thesis, but we also want to understand the market at a more granular level: which markets and submarkets are relevant to the convergence thesis, and which growth companies are relevant in each of those spaces.

To do that, we have a lot of textual data on startups. Let’s assume we have a single text describing each company: the question is, how can we compare these company descriptions effectively?

Use cases we want to solve include at least the following:

- How to find competitors for any company? Based on textual data, which companies are solving the same kind of problems for their customers?

- How to find companies that work on a certain problem? For example, how can we find companies that do something related to “data quality monitoring”, when their descriptions often don’t use those exact words?

Every company doing the same thing has its own way of describing it, and traditional approaches to Natural Language Processing (NLP) are driven by concepts such as keywords and term frequencies, which often perform quite poorly given the ambiguity of everyday language. Ultimately, we want to understand which texts in our set of hundreds of thousands describe similar products and technologies for similar use cases, without necessarily using the same wording.
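To illustrate why keyword-driven approaches struggle, here is a minimal sketch that scores texts purely by word overlap (Jaccard similarity on lowercase word sets). The query and both company descriptions are made-up examples: the relevant description shares no words with the query, while an unrelated one scores higher simply because it happens to reuse “data” and “quality”.

```python
import re


def tokens(text: str) -> set[str]:
    """Lowercase word set; a crude stand-in for keyword-based NLP."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def jaccard(a: str, b: str) -> float:
    """Share of distinct words the two texts have in common."""
    ta, tb = tokens(a), tokens(b)
    if not ta and not tb:
        return 0.0
    return len(ta & tb) / len(ta | tb)


query = "data quality monitoring"
# Hypothetical descriptions: the first is relevant, the second is not.
relevant = "Observability platform that detects broken pipelines and anomalous tables"
unrelated = "Quality control for data center cooling hardware"

print(jaccard(query, relevant))   # 0.0
print(jaccard(query, unrelated))  # 0.25
```

Term-frequency weighting such as TF-IDF refines the scores but cannot fix the underlying problem: texts with no words in common still look unrelated.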

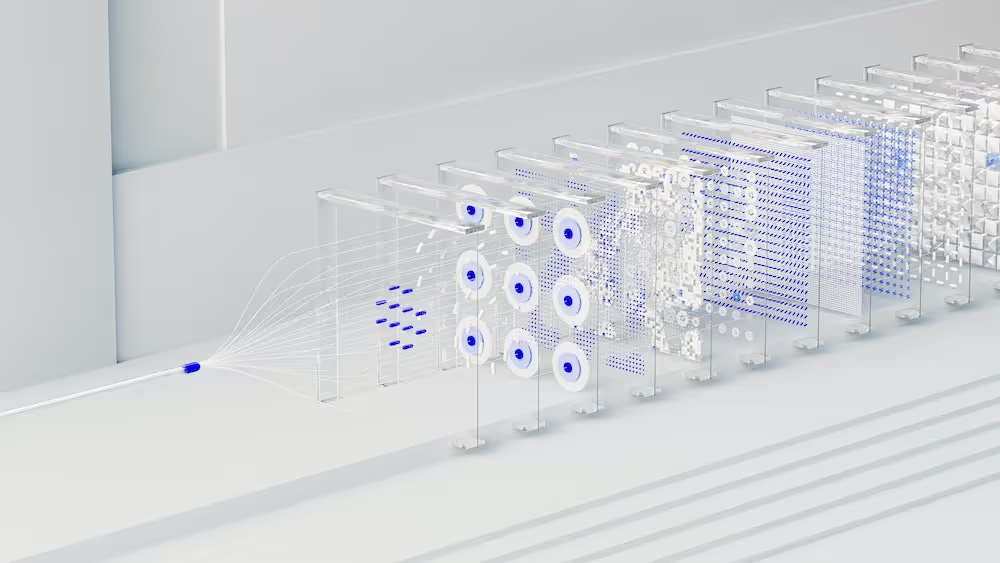

To achieve this, we’ve deployed an LLM to a private endpoint and use it to convert company descriptions into vectors called embeddings. Embedding is a generic mathematical term describing a structure contained within another structure. The input to our API is a text, and the output is a 2048-item embedding vector. In practice, it’s a random-looking set of 2048 numbers that have no meaning to a human observer. However, because the model maps the meaning of any text to numbers in the same consistent way, texts with similar meaning end up with vectors that lie close to each other. This is what makes the approach work: embeddings do not represent words, they represent meaning.
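The contract of such an endpoint is simple: a text goes in, a 2048-item vector comes out. The minimal sketch below illustrates that contract with Python’s standard-library HTTP server. It is a stand-in under stated assumptions, not our deployment: the JSON field names `text` and `embedding` are hypothetical, and `embed_text` is a placeholder that hashes words into buckets instead of running an LLM, so its vectors carry no semantic meaning.

```python
import hashlib
import json
import math
from http.server import BaseHTTPRequestHandler, ThreadingHTTPServer

DIM = 2048  # embedding size used by our API


def embed_text(text: str) -> list[float]:
    """PLACEHOLDER, not an LLM: hashes each word into one of DIM buckets and
    L2-normalizes, only so the endpoint can be exercised end to end."""
    vec = [0.0] * DIM
    for word in text.lower().split():
        bucket = int.from_bytes(hashlib.sha256(word.encode()).digest()[:8], "big") % DIM
        vec[bucket] += 1.0
    norm = math.sqrt(sum(v * v for v in vec)) or 1.0
    return [v / norm for v in vec]


class EmbeddingHandler(BaseHTTPRequestHandler):
    def do_POST(self) -> None:
        # Expect a hypothetical JSON body of the form {"text": "..."}.
        length = int(self.headers.get("Content-Length", 0))
        try:
            payload = json.loads(self.rfile.read(length) or b"{}")
            text = payload["text"]
            if not isinstance(text, str):
                raise TypeError("text must be a string")
        except (ValueError, KeyError, TypeError):
            self.send_error(400, "expected a JSON body with a string 'text' field")
            return
        body = json.dumps({"embedding": embed_text(text)}).encode()
        self.send_response(200)
        self.send_header("Content-Type", "application/json")
        self.send_header("Content-Length", str(len(body)))
        self.end_headers()
        self.wfile.write(body)

    def log_message(self, format, *args) -> None:
        pass  # keep the sketch quiet


def make_server(host: str = "127.0.0.1", port: int = 0) -> ThreadingHTTPServer:
    """port=0 lets the OS pick a free port."""
    return ThreadingHTTPServer((host, port), EmbeddingHandler)
```

Start it with `make_server(port=8080).serve_forever()` and POST a JSON body to it. In a real deployment, `embed_text` would call the model, and a managed service would typically take care of scaling and availability.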

Text (in this case, company descriptions) can’t be used for calculations, but vectors can. We now have a single 2048-item vector representing every company in our data. There are many ways to compare vectors to each other. For our first use case, finding competitors for a company, we simply search for the vectors closest to the vector of the company we are finding competitors for. This is done by calculating a metric called cosine similarity across our hundreds of thousands of vectors. It might sound outlandish, but for any practitioner it is a standard linear algebra operation. As discussed above, LLMs would never be used for this type of calculation.
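A minimal sketch of that competitor search, assuming the embeddings are held in a dictionary keyed by a company id (a hypothetical layout): cosine similarity is the dot product of two vectors divided by the product of their lengths, and the competitors are simply the k highest-scoring other companies.

```python
import heapq
import math
from typing import Dict, List, Sequence, Tuple


def cosine_similarity(a: Sequence[float], b: Sequence[float]) -> float:
    """cos(a, b) = a·b / (|a| |b|); 0.0 if either vector is all zeros."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0 or norm_b == 0:
        return 0.0
    return dot / (norm_a * norm_b)


def find_competitors(
    company_id: str,
    embeddings: Dict[str, Sequence[float]],
    k: int = 10,
) -> List[Tuple[str, float]]:
    """Return the k companies whose embeddings are closest to company_id's."""
    target = embeddings[company_id]
    scored = (
        (other, cosine_similarity(target, vec))
        for other, vec in embeddings.items()
        if other != company_id  # a company is not its own competitor
    )
    return heapq.nlargest(k, scored, key=lambda pair: pair[1])
```

This pure-Python loop is for readability only; with hundreds of thousands of 2048-dimensional vectors, the same computation would in practice be vectorized as a matrix product in a numerical library such as NumPy.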

To search our company embedding dataset with arbitrary search phrases, we can’t use batch operations, since our team expects real-time interaction. Therefore, we have stored all our company embeddings in Elasticsearch, a database optimized for quick searches. For each new search phrase entered through our search UI, we call our API to get its embedding in real time and use Elasticsearch to compare it against our database of company embeddings. All this takes less than a second, giving the user an immediate list of relevant companies.
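A minimal sketch of the two Elasticsearch request bodies such a setup needs, assuming Elasticsearch 8.x with a `dense_vector` field: one to create the index and one to search it with the embedding of a new search phrase. The index name `companies` and the field names are hypothetical, and the maximum `dims` allowed for indexed vectors depends on the Elasticsearch version, so check the `dense_vector` documentation for the version you run. An exact `script_score` alternative is included for comparison.

```python
from typing import Any, Dict, Sequence

EMBEDDING_DIMS = 2048  # matches the 2048-item vectors returned by our API


def index_body(dims: int = EMBEDDING_DIMS) -> Dict[str, Any]:
    """Body for creating the index, e.g. `PUT /companies` (hypothetical name)."""
    return {
        "mappings": {
            "properties": {
                "name": {"type": "keyword"},
                "description": {"type": "text"},
                "embedding": {
                    "type": "dense_vector",
                    "dims": dims,
                    "index": True,          # build an index for approximate kNN
                    "similarity": "cosine",
                },
            }
        }
    }


def knn_search_body(
    query_vector: Sequence[float],
    k: int = 10,
    num_candidates: int = 100,
    dims: int = EMBEDDING_DIMS,
) -> Dict[str, Any]:
    """Body for `POST /companies/_search` using the approximate kNN option."""
    if len(query_vector) != dims:
        raise ValueError(f"expected {dims} dimensions, got {len(query_vector)}")
    if num_candidates < k:
        raise ValueError("num_candidates must be >= k")
    return {
        "knn": {
            "field": "embedding",
            "query_vector": list(query_vector),
            "k": k,
            "num_candidates": num_candidates,
        },
        "_source": ["name", "description"],
    }


def exact_search_body(query_vector: Sequence[float], size: int = 10) -> Dict[str, Any]:
    """Alternative: brute-force cosine scoring over all documents.
    The +1.0 keeps scores non-negative, which Elasticsearch requires."""
    return {
        "size": size,
        "query": {
            "script_score": {
                "query": {"match_all": {}},
                "script": {
                    "source": "cosineSimilarity(params.query_vector, 'embedding') + 1.0",
                    "params": {"query_vector": list(query_vector)},
                },
            }
        },
    }
```

The approximate kNN option trades a little recall for speed, which suits an interactive search UI; the exact `script_score` query scans every document and becomes slower as the index grows.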

In doing all this, we’ve used LLMs together with tools from the more traditional compute toolbox, selecting the right tool for each part of the problem. This is a good example of how LLMs are used in an operational setup: they solve only the part of the problem they do better than any other tool. It also shows a way of addressing the timeliness problem: the pre-trained model has not been trained on our data and knows nothing about our companies. Nevertheless, just as GPT models can be used with data more recent than their training data, we can use our model to extract the meaning of data it has never seen before.

What comes next? The ecosystem that is forming around LLMs

The breakout LLM story in the media is the battle between OpenAI/Microsoft and Google. However, we at NGP Capital tend to be more interested in what is happening one level below. An ecosystem of early-stage startups is forming around LLMs, with products built both for horizontal tooling that helps customers embed LLMs in their own products and solutions, and for vertically oriented products solving more specific business problems. Horizontal markets forming around LLMs include at least synthetic data, AI safety & security, and MLOps platforms designed specifically for LLMs. Among vertical use cases, problems such as copywriting, code generation, and customer support seem to be ahead of the pack.

One example of a market forming around LLMs, closely related to the discussion above, is vector databases. These are specialized databases designed to index, store, and quickly retrieve embeddings, supporting use cases exactly like the NGP Capital semantic search described above. As vector embeddings are a standard way of extracting and storing meaning from unstructured data, an optimized toolkit for embeddings makes intuitive sense. At NGP, we’ll keep a close eye on these sub-domains, as they provide critical components of the LLM ecosystems of the future.

At NGP Capital, we’re excited about the strides Generative AI is taking, and about how it powers, and is embedded in, groundbreaking intelligent products. We also welcome challenging views and comments. For questions or feedback, you can reach me at atte@ngpcap.com.