
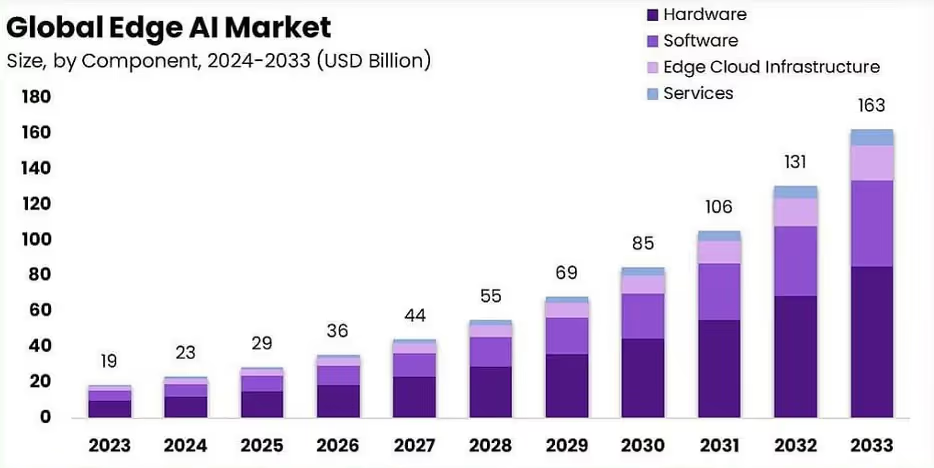

AI is rapidly transforming every industry, and edge computing is no exception. Over the next decade, the number of AI workloads running at or near the data source is expected to grow at an unprecedented pace. Often referred to as “edge AI,” this approach encompasses everything from complex deployments on cameras, robots, and smartphones to constrained environments like low-power IoT devices. According to the 2024 Market.us report, the Edge AI market is on track to exceed $160 billion by 2030, with a projected compound annual growth rate (CAGR) of 24%, underscoring the fast expansion of this sector.

By processing data at or near the source, edge AI dramatically reduces latency, conserves network bandwidth, and enhances data privacy. These benefits are driving innovation across a range of applications, from real-time anomaly detection in industrial automation to personalized healthcare monitoring and advanced network analytics.

Despite this promising outlook, edge AI has not always lived up to its potential. One key obstacle has been the complexity introduced by the diversity of edge hardware, making it difficult to develop software that seamlessly runs across multiple devices. Early implementations also tended to focus on narrow, use-case-specific deployments, limiting both interoperability and scalability.

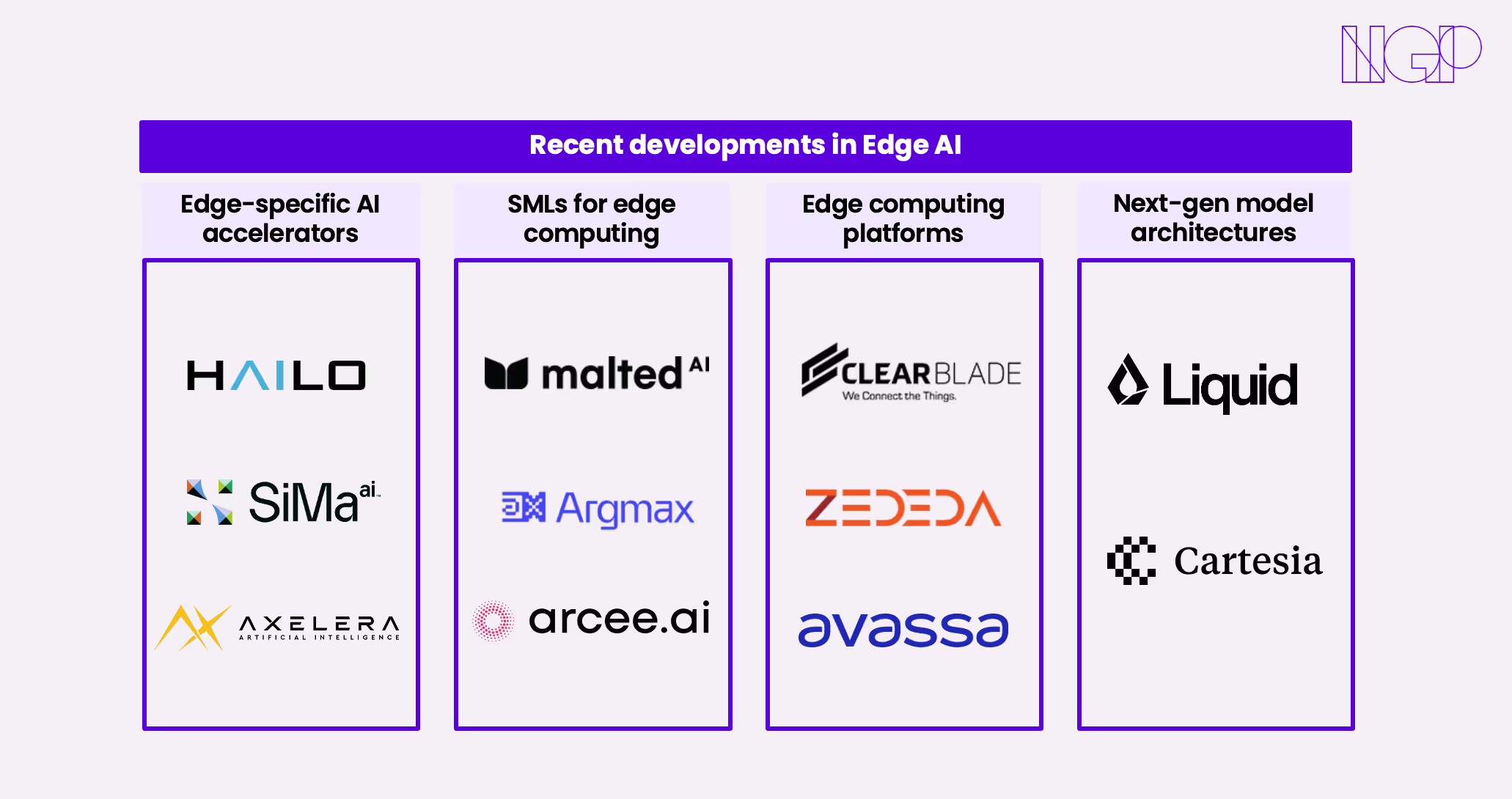

Yet, momentum is building. Below, I outline four developments shaping edge AI: the growing accessibility of specialized AI accelerators, the rise of smaller foundation models fueling broader deployments, platforms tackling the complexities of scaling AI on edge devices, and new model architectures.

Edge-specific AI accelerators are on the path to becoming mainstream

Unlike general-purpose GPUs, edge-specific AI accelerators prioritize energy efficiency and high performance per watt, without significantly compromising how many trillions of operations per second (TOPS) they can perform. This advantage is critical for battery- and memory-constrained devices that need to run resource-intensive AI workloads without compromising on speed.

These accelerators are transitioning from being a niche or emerging technology to being used in real-life applications, and several startups have started shipping their products. Hailo AI, for instance, has released benchmarks showing its accelerator outperforming NVIDIA’s Jetson (the GPU family designed for IoT), delivering higher TOPS at just a quarter of the power consumption for targeted deep learning tasks. A growing ecosystem of startups is offering not only these high-performance chips but also no-code software platforms, making it simpler for developers to build, deploy, and scale machine learning deployments on the edge without deep hardware expertise.

Sima AI, which developed a software ecosystem on top of its chip infrastructure, recently launched a partnership with AWS that lets developers test and deploy models on its ML System on Chip (SoC) hosted in the cloud. The company also partnered with Trumpf to embed AI chips into laser systems for real-time monitoring of weld quality, a clear illustration of how edge accelerators are beginning to transform traditional industries.

Rapid growth of small models will accelerate AI deployments on the edge

The second trend reshaping edge AI is the explosion of Small Language Models (SLMs). A few years ago, running advanced AI models at the edge often required high-power hardware. Today, a wave of open-source and proprietary models built with fewer than 10 billion parameters, or compressed versions of larger models, is delivering performance on par with bigger models for domain-specific tasks.

Enterprises are increasingly drawn to these “small but mighty” models because they offer lower operational costs, faster inference, and more robust data privacy, since less data needs to move off-device. Innovations in model compression and optimization, through techniques like quantization, pruning, and knowledge distillation, further enhance their viability for edge deployments.
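To make one of these techniques concrete, here is a minimal sketch of symmetric 8-bit quantization in plain Python. It is an illustration only: the function names (`quantize_int8`, `dequantize`) and the weight values are hypothetical, and production toolchains use more elaborate schemes (per-channel scales, calibration data, and so on).

```python
def quantize_int8(weights):
    """Map floats to integers in [-127, 127] using one shared scale factor."""
    max_abs = max(abs(w) for w in weights)
    # Guard against an all-zero tensor, where any scale works.
    scale = max_abs / 127 if max_abs > 0 else 1.0
    quantized = [max(-127, min(127, round(w / scale))) for w in weights]
    return quantized, scale


def dequantize(quantized, scale):
    """Recover approximate float values from the stored integers."""
    return [q * scale for q in quantized]


# Made-up weights for illustration; a real model has millions of these.
weights = [0.52, -1.27, 0.003, 0.9]
q, scale = quantize_int8(weights)
restored = dequantize(q, scale)

# The rounding error per weight is bounded by half of one quantization step.
max_error = max(abs(a - b) for a, b in zip(weights, restored))
print(q, scale, max_error)
```

Each weight is stored in one byte instead of four (for 32-bit floats), which is where the memory and bandwidth savings come from; the cost is the small rounding error measured by `max_error`.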

For example, the YOLO family of computer vision models runs real-time object detection with just a few million parameters, while Microsoft’s Phi-4, a 14B-parameter model, excels at reasoning skills in math and language tasks. On the proprietary side, startups such as Malted and Arcee AI help enterprises build and deploy their own small models using proprietary data, while Argmax and Multiverse are focusing on compression platforms that could enable large-scale production deployments on commodity hardware.

These lightweight models unlock numerous possibilities, from drone-based environmental monitoring to intelligent city infrastructure. We expect SLMs to continue to push the frontier of size vs. quality.

The emergence of edge platforms may solve scaling bottlenecks

Operationalizing AI on diverse edge devices, each with unique hardware constraints, is inherently more complex than managing a centralized cloud environment. Several startups aim to simplify this by developing abstraction layers so developers can build and manage applications without custom-coding for every single device. Companies like Zededa, Avassa and ClearBlade handle tasks such as fleet-wide AI model deployment, updates, configuration management, and real-time performance monitoring from a centralized platform. Generative AI is also finding its way into these solutions. For instance, ClearBlade has introduced an AI assistant that streamlines the setup of new use cases for non-technical staff.

Nokia’s MX Industrial Edge is also a prime example of a platform that reduces Industrial IoT deployment complexity. With high processing capacity, it ensures data security and sovereignty, meets the latency needs of mission-critical OT processes, and is capable of running various industrial AI applications.

As these platforms mature, they can reduce the barriers to large-scale adoption and unlock new AI-driven services at the edge.

New model architectures

Next-generation neural architectures could further accelerate AI deployments at the edge. For example, Liquid Neural Networks (LNNs) adapt in real time to new data, making them valuable for autonomous systems or fast-changing industrial environments. State Space Models (SSMs) excel at understanding and predicting how systems change over time and can streamline tasks like predictive maintenance or anomaly detection. Emerging players such as Liquid AI and Cartesia are piloting these architectures in real-world environments.
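For readers unfamiliar with the term, the idea behind a state space model can be sketched with the textbook discrete-time linear recurrence: a hidden state is updated from each new input, and the output is read from that state. The scalar version below is an assumption-laden toy, not the architecture used by any company named above; the function name `run_ssm` and the parameter values are hypothetical, and modern SSM layers learn these parameters and work with vectors and matrices.

```python
def run_ssm(a, b, c, inputs, x0=0.0):
    """Scalar linear state space model:
        x[k] = a * x[k-1] + b * u[k]   (state update)
        y[k] = c * x[k]                (readout)
    """
    x = x0
    outputs = []
    for u in inputs:
        x = a * x + b * u      # the state carries a decaying memory of past inputs
        outputs.append(c * x)  # the output depends only on the current state
    return outputs


# Made-up sensor readings; a constant input drives the state toward b / (1 - a).
readings = [1.0, 1.0, 1.0, 1.0]
outputs = run_ssm(a=0.5, b=1.0, c=1.0, inputs=readings)
print(outputs)
```

Because each step needs only the previous state and the current input, memory use stays constant no matter how long the input stream runs, which is part of why this family of models is attractive on constrained devices.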

Innovations in hardware, model design, and operational frameworks are expanding the range of applications that can run on the edge. If you're building in this space or have thoughts to share, reach out to me at fernanda@ngpcap.com. I’m always happy to connect and hear your feedback!
